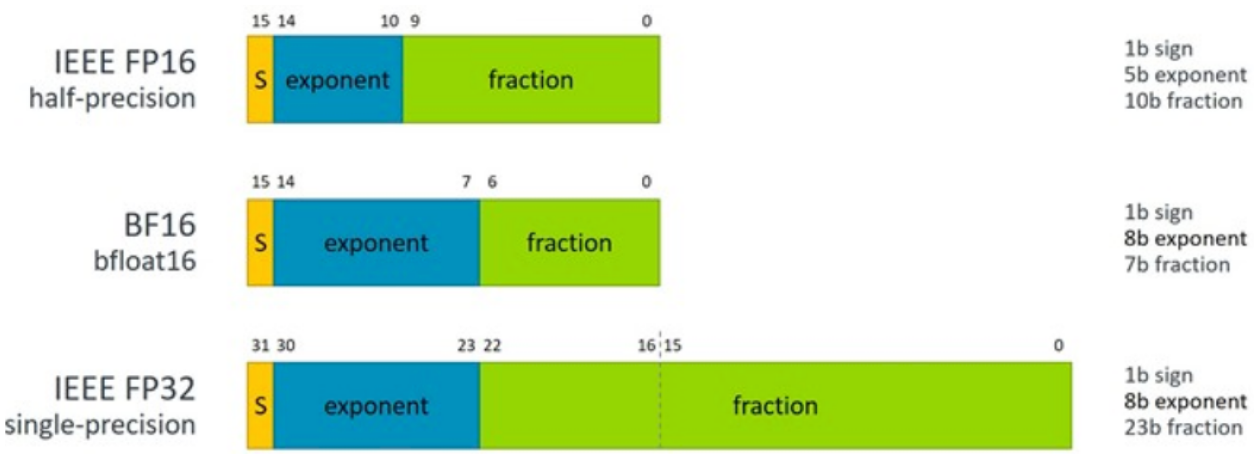

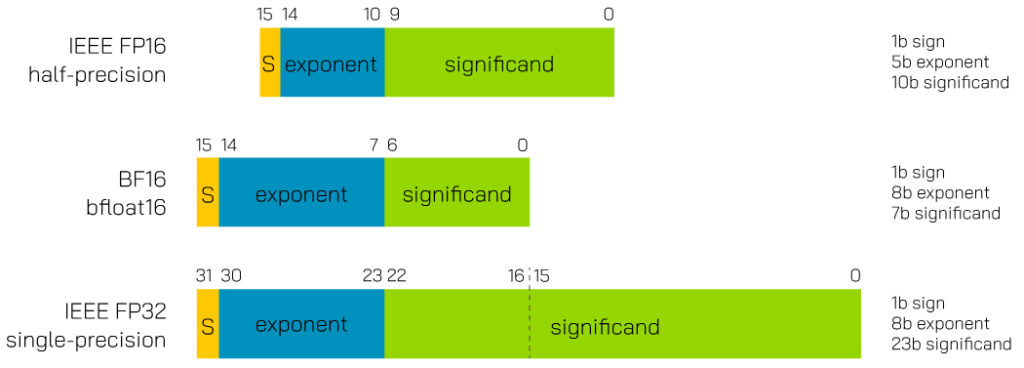

An Energy-Efficient Sparse Deep-Neural-Network Learning Accelerator With Fine-Grained Mixed Precision of FP8–FP16 | Semantic Scholar

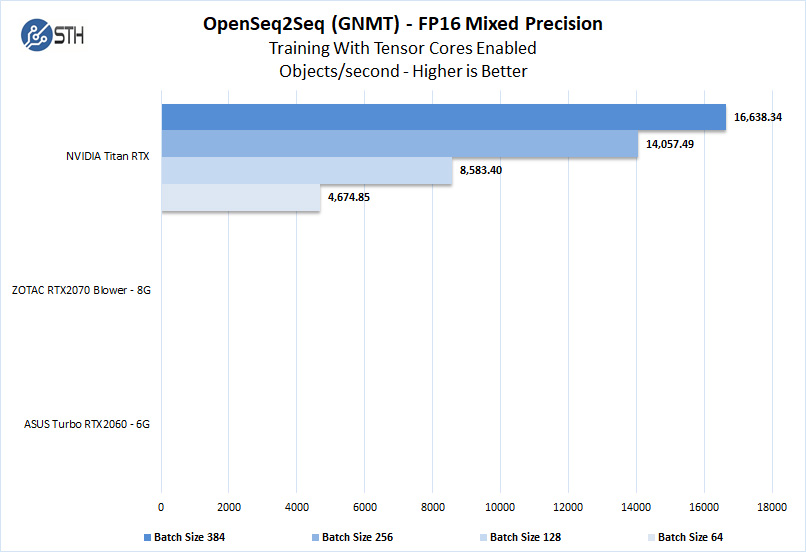

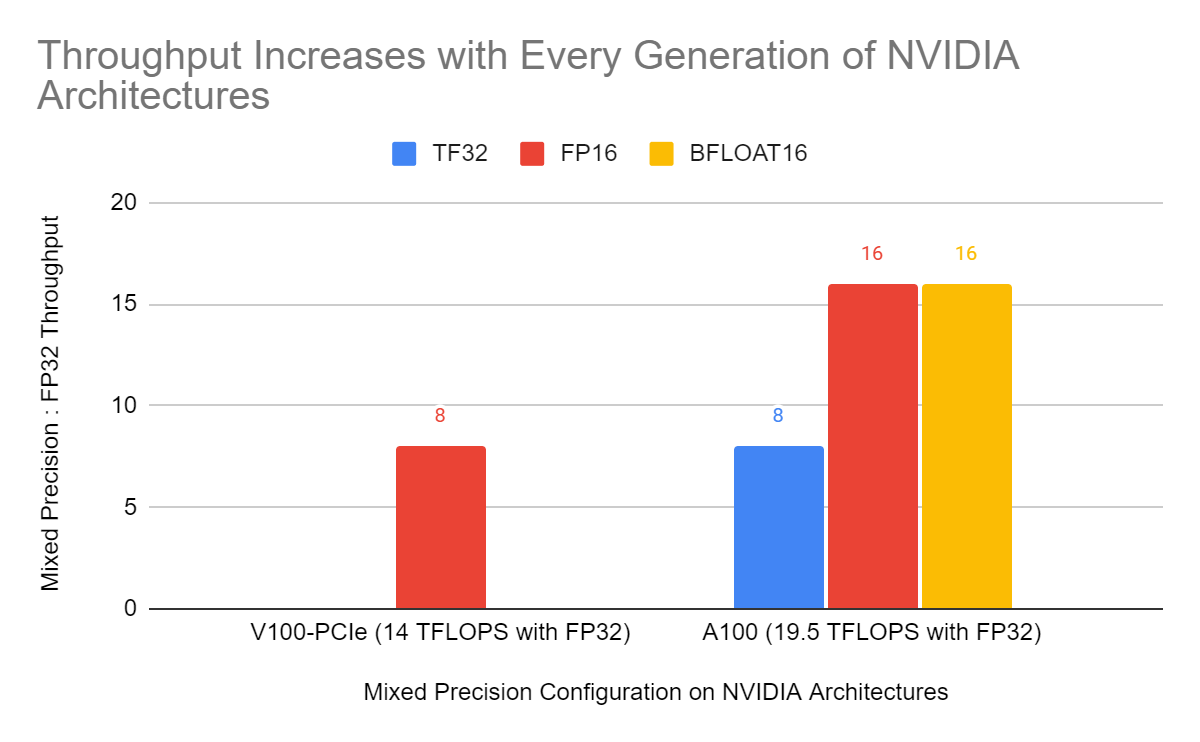

Revisiting Volta: How to Accelerate Deep Learning - The NVIDIA Titan V Deep Learning Deep Dive: It's All About The Tensor Cores

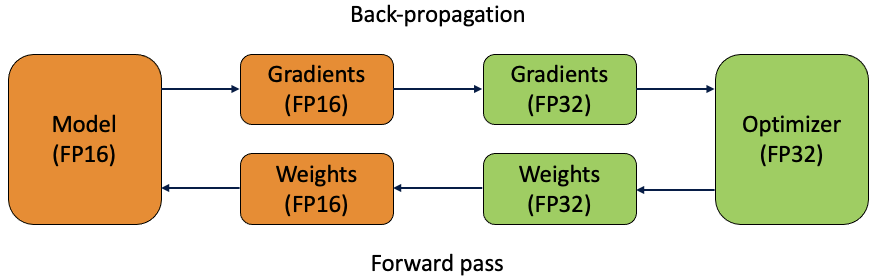

Figure represents comparison of FP16 (half precision floating points)...

PyTorch on Twitter: "FP16 is only supported in CUDA, BF16 has support on newer CPUs and TPUs. Calling .half() on your network and tensors explicitly casts them to FP16, but not all
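
The FP16 versus BF16 distinction in the tweet above comes down to how 16 bits are split: FP16 uses 5 exponent bits and 10 mantissa bits, while BF16 uses 8 exponent bits and 7 mantissa bits. The following is a minimal sketch of that trade-off using only the Python standard library. It does not use PyTorch, and the helper names `to_fp16` and `to_bf16` are hypothetical, introduced here for illustration only.

```python
import math
import struct


def to_fp16(x: float) -> float:
    """Round x to IEEE 754 half precision (hypothetical helper).

    Values beyond the FP16 range are mapped to +/-inf, mirroring what
    an overflowing FP16 cast does in hardware.
    """
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except OverflowError:
        # struct refuses to pack out-of-range values; model that as infinity.
        return math.copysign(math.inf, x)


def to_bf16(x: float) -> float:
    """Round a finite x to bfloat16 precision (hypothetical helper).

    bfloat16 is the top 16 bits of a float32, so we round the float32
    bit pattern to nearest-even at bit 16 and clear the low 16 bits.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    bits += 0x7FFF + ((bits >> 16) & 1)  # round to nearest, ties to even
    bits &= 0xFFFF0000                   # keep sign, 8 exponent, 7 mantissa bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]


if __name__ == "__main__":
    # Range: 70000 overflows FP16 (largest finite value is 65504) but fits BF16.
    print(to_fp16(70000.0), to_bf16(70000.0))
    # Precision: 1 + 2**-10 is exact in FP16 but rounds to 1.0 in BF16.
    print(to_fp16(1.0 + 2**-10), to_bf16(1.0 + 2**-10))
```

The sketch shows why the two formats behave differently in training: FP16 keeps more precision but overflows early, whereas BF16 trades precision for the same exponent range as FP32.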